On-Device ML for LLMs: Post-Training Optimization Techniques with T5 and Beyond

Description

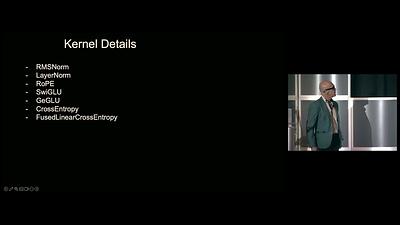

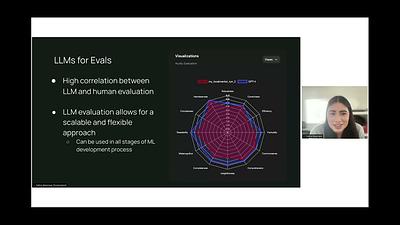

This session explores the practical side of deploying Large Language Models (LLMs) on devices, focusing on T5 and its modern variants. Running ML models on devices is challenging because of limited computational resources and tight power constraints. On-device ML is nonetheless valuable: it reduces dependence on cloud services, improves privacy, and lowers latency. Making LLMs practical on devices requires advanced optimization techniques that balance model performance against efficiency.

Grammarly is at the forefront of on-device ML, continuously innovating to deliver high-quality language tools. Drawing on Grammarly's industry experience, this presentation offers practical insights for anyone interested in implementing on-device machine learning with LLMs. Topics covered include:

- techniques for optimizing performance and reducing inference latency (e.g., quantization, pruning, and layer fusion);
- methods for building efficient, scalable AI solutions on edge devices;
- common challenges in deploying LLMs to edge devices, and how to address them.