Memory Optimizations for Machine Learning

December 05, 2024

32 min

Free

model-pruning

neural-networks

cpu

data-quantization

machine-learning

llm

memory-optimization

quantization

inference

deep-learning

transformer-models

gpu

Description

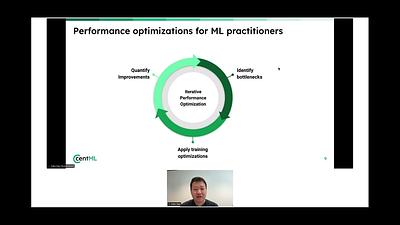

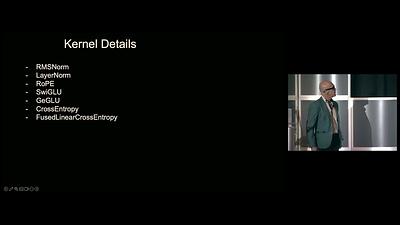

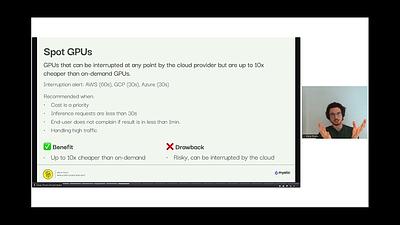

This talk explores memory optimization strategies for deploying machine learning models, especially large language models (LLMs). It examines the memory footprint of ML data structures and algorithms and details techniques such as data quantization and model pruning. A significant part of the talk is devoted to LLM inference: the factors that affect its memory usage and practical strategies for conserving memory without compromising performance. The presentation also covers hardware considerations and real-world examples of memory optimization in ML.