Lessons learned from scaling large language models in production

May 16, 2024

41 min

Free

ray-serve

large-language-models

llm

rag

mlops

gpu

performance-optimization

inference

scaling

python

fastapi

kubernetes

vm

vector-database

Description

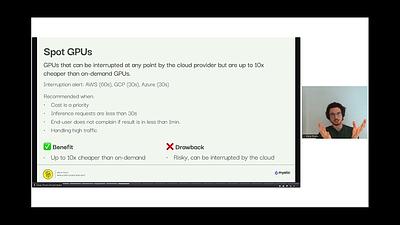

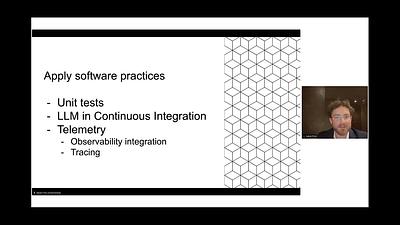

Open source models have made running your own LLM accessible to many people. It's pretty straightforward to set up a model like Mistral with a vector database and build your own RAG application. But making it scale to high-traffic demands is another story. LLM inference itself is slow, and GPUs are expensive, so we can't simply throw hardware at the problem. Once you add things like guardrails to your application, latencies compound. In this talk, Matt Squire will share lessons learned from building and running LLMs for customers at scale. Using real code examples, he'll cover performance profiling, getting the most out of GPUs, and interactions with guardrails.