Investigating the Evolution of Evaluation from Model Training to GenAI Inference

Description

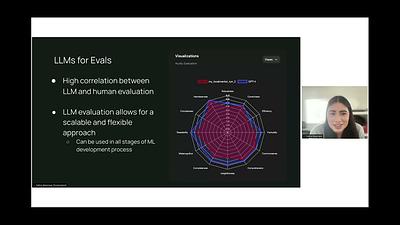

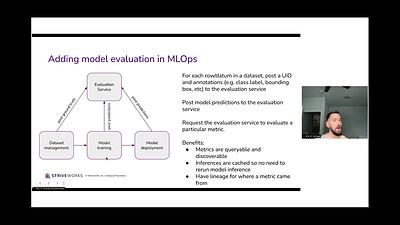

This session explores how evaluation techniques in machine learning have evolved, from traditional model training through fine-tuning to the current challenge of assessing large language models (LLMs) and generative AI systems. We'll trace the progression from simple metrics such as accuracy and F1 score to automated evaluation systems that can generate criteria and assertions. The session culminates in an in-depth look at approaches such as EvalGen, which use LLMs to help create evaluation criteria aligned with human judgment while addressing phenomena such as criteria drift (evaluators' criteria shifting as they grade more model outputs).

About the Speaker: Anish Shah is a leading expert in AI and ML at Weights & Biases, specializing in optimizing large language models for complex applications. With extensive experience in fine-tuning and model evaluation, Anish has contributed to significant advances in AI research and has presented at many conferences and events.