From Idea to Production: AI Infra for Scaling LLM Apps

May 16, 2024

39 min

Free

llm

ai

ai-infrastructure

llm-ops

prompt-engineering

model-deployment

gpu

data-pipelines

rag

cost-optimization

generative-ai

llm-applications

Description

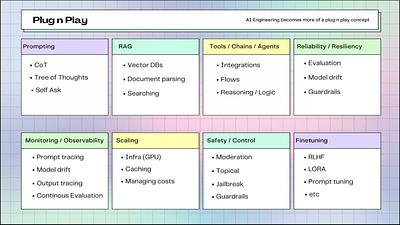

AI applications must adapt to new models, evolving workflows, and complex debugging challenges. This talk addresses the AI infrastructure needed to scale LLM applications from beta to production, covering prompt management, data pipelines, Retrieval-Augmented Generation (RAG), cost optimization, and GPU availability. Join Guy Eshet to explore strategies for addressing the challenges of building Generative AI and LLM apps, designing for adaptability, and preparing applications for future model advancements.