Evaluation Techniques for Large Language Models

Description

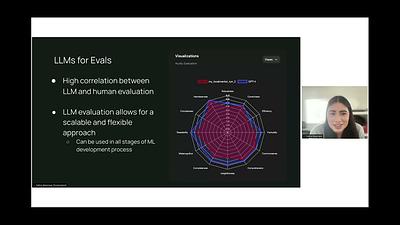

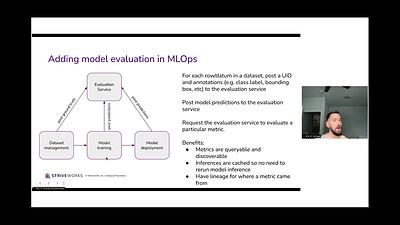

Large language models (LLMs) represent an exciting trend in AI, with many new commercial and open-source models released recently. However, selecting the right LLM for your needs has become increasingly complex. This tutorial provides data scientists and machine learning engineers with practical tools and best practices for evaluating and choosing LLMs. It begins with the existing research on the capabilities of LLMs versus smaller, traditional ML models. For cases where an LLM is the best solution, it then covers several evaluation techniques, including evaluation suites such as the EleutherAI Harness, head-to-head competition approaches, and using LLMs to evaluate other LLMs. The tutorial also touches on subtle factors that affect evaluation, including the role of prompts, tokenization, and requirements for factual accuracy. Finally, a discussion of model bias and ethics is integrated into the working examples. Attendees will gain an in-depth understanding of LLM evaluation tradeoffs and methods. Jupyter Notebooks will provide reusable code for each technique discussed.
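As a rough illustration of the head-to-head approach mentioned above, pairwise comparison results are often turned into a ranking with an Elo-style rating. The sketch below is not taken from the tutorial notebooks: the model names, the match list, and the `k` and `initial` values are all hypothetical, and Elo is only one of several possible scoring schemes.

```python
from typing import Dict, Iterable, Tuple

# One match: (model_a, model_b, score_a), where score_a is
# 1.0 if model_a's answer was preferred, 0.0 if model_b's was, 0.5 for a tie.
Match = Tuple[str, str, float]


def expected_score(rating_a: float, rating_b: float) -> float:
    """Standard Elo expectation that A is preferred over B."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_ratings(
    matches: Iterable[Match], k: float = 32.0, initial: float = 1000.0
) -> Dict[str, float]:
    """Process matches in order and return the final rating per model."""
    ratings: Dict[str, float] = {}
    for model_a, model_b, score_a in matches:
        ra = ratings.setdefault(model_a, initial)
        rb = ratings.setdefault(model_b, initial)
        ea = expected_score(ra, rb)
        # A gains what B loses, so the total rating is conserved.
        delta = k * (score_a - ea)
        ratings[model_a] = ra + delta
        ratings[model_b] = rb - delta
    return ratings


if __name__ == "__main__":
    # Hypothetical judgments, e.g. from human raters or an LLM judge.
    judgments = [
        ("model_x", "model_y", 1.0),
        ("model_y", "model_z", 0.5),
        ("model_x", "model_z", 1.0),
    ]
    for name, rating in sorted(elo_ratings(judgments).items(), key=lambda kv: -kv[1]):
        print(f"{name}: {rating:.1f}")
```

Because Elo updates depend on match order, results from a small number of comparisons should be read as indicative only; shuffling the matches and averaging over several passes is one common way to reduce that sensitivity.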