Efficiently Fine-Tune And Serve Your Own LLMs

Description

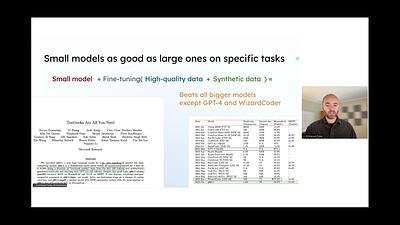

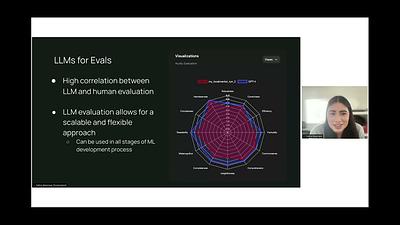

This talk explores the process of efficiently fine-tuning and serving open-source Large Language Models (LLMs). Alex Sherstinsky introduces LoRA (Low-Rank Adaptation) as a parameter-efficient fine-tuning technique that allows for customization of LLMs with significantly fewer trainable parameters. The presentation covers the challenges of using commercial LLMs like GPT-4, such as cost and lack of ownership, and advocates for the benefits of fine-tuning open-source models for specific tasks. It delves into the technical aspects of fine-tuning using frameworks like Ludwig and efficient model serving with Lorax, demonstrating a practical code walkthrough on fine-tuning three adapters for customer support scenarios using the Mistral 7B model. The talk highlights how fine-tuned models can achieve comparable or superior performance to commercial LLMs at a fraction of the cost, with a focus on practical applications and the importance of model serving in production environments.

Up Next